Monday, December 22, 2008

What Comes After Minds?


We can count up the number of parts, links, subparts, logical depth, and degrees of freedom of various complicated entities (a jumbo jet, a rainforest, a starfish), and the final tally of components may approach the total for a brain. Yet the functions and results of those parts are far more complicated than the sum of the parts. When we begin to consider the multiple processes each part participates in, the complexity of the mind becomes more evident. Considered in the light of their behavior, living things outrank the inert in complexity, and smart things outrank dumb ones. We also have evidence for this claim in our efforts to manufacture complexity. Making a stone hammer is pretty easy. Making a horseless carriage is more difficult. Making a synthetic organism is more difficult still. A human mind is yet more difficult to synthesize or recreate. We have not come close to achieving an artificial mind, and some believe the complexity of the mind is so great that we will forever fail in that quest. Because of this difficulty and uncertainty, the mind is currently the paragon of complexity in creation.

If anything might rival the mind’s ultimate complexity, it would be the planetary biosphere. In its sheer mass and scale, the tangle of zillions of organisms and vast ecosystems in the biosphere trumps the roughly 1.4 kilos of neurons and synapses in the brain by miles. Yet we tend to assign greater complexity to the mind for two reasons. One, we think we understand how ecosystems work, although we can’t yet predict how they all work together; what we have not conquered is their planetary scale. On the other hand, we are baffled by how the human mind works even in small regions. Scale is just one problem. Our mind parts are much more deeply entangled, reflective, recursive, and woven together into a unified whole than the biosphere is. As a whole, the mind is still a mystery.

Two, the output of the biosphere is primarily more of itself. It will self-regulate and slowly evolve new species, but it has not produced new types of creation, except of course that it produced human minds. But human minds have created all these other things, including miniature ecosystems and tiny biospheres, so we assign greater complexity to the mind.

This point was better said, more succinctly, by Emily Dickinson in her grenade of a poem.

The brain is wider than the sky,

For, put them side by side,

The one the other will contain,

With ease, and you, beside.

The asymmetry of compression is an important metric. The fact that the brain can contain an abstract of the biosphere, but the biosphere cannot contain an abstract of a human mind, suggests that one is larger, or more complex, than the other.

While we have not yet made anything as complex as a human mind, we are trying to. The question is, what would be more complex than a human mind? What would we make if we could? What would such a thing do? In the story of technological evolution – or even biological evolution – what comes after minds?

The usual response to “what comes after a human mind” is better, faster, bigger minds. The same thing, only more. That is probably true (we might be able to make or evolve bigger, faster minds), but as pictured they are still minds.

A more recent response, one that I have been championing, is that what comes after minds may be a biosphere of minds, an ecological network of many minds and many types of minds (sort of like a rainforest of minds) that would have its own meta-level behavior and consequences. Just as a biological rainforest processes nutrients, energy, and diversity, this system of intelligences would process problems, memories, anticipations, data and knowledge. This rainforest of minds would contain all the human minds connected to it, along with various artificial intelligences and billions of semi-smart things linked up into a sprawling ecosystem of intelligences. Vegetable intelligences, insect intelligences, primate intelligences and human intelligences and maybe superhuman intelligences, all interacting in one seething network. As in any ecosystem, different agents have different capabilities and different roles. Some would cooperate, some would compete. The whole complex would be a dynamic beast, constantly in flux.


We could imagine the makeup of a rainforest of minds, but what would it do? Having thoughts, solving problems is what minds do. What does an ecosystem of minds do that an individual mind does not?

And what comes after it, if a biome of intelligences is next? If we let our imaginations construct the most complex entity possible, what does it do? I have found that we imagine it either as an omniscient mind or as a lesser god (almost the same thing). In a certain sense we can’t get beyond the paragon of a mind.

Cultures run on metaphors. The human mind is the current benchmark metaphor for our scientific society. Once upon a time we saw nature as an animal, then it was a clock, now we see it as a kind of mind. A mind is the metaphor for ultimate mystery, ultimate awe. It represents the standard for our attempts at creation. It is the metric for complexity. It is also our prison because we can’t see beyond it. This is the message of the Singularitans: we are incapable of imagining what comes after a human mind.

I don’t believe that, but I don’t know what the answer is either. I think it is too early in our technological development to have reached a limit of complexity. Surely in the next 100 or 500 years we’ll construct entities many thousands of times more complex than a human mind. As these ascend in prominence they will become the new metaphor.

Often the metaphor precedes the reality. We build what we can imagine. Can we imagine – now – what comes after minds?

http://www.kk.org/thetechnium/archives/2008/12/what_comes_afte.php

Portable devices give "cloud" new clout (Reuters)

BOSTON (Reuters) - Chances are the mobile phone tucked in your pocket, the lightweight laptop in your backpack, or the navigation system in your car are under a cloud.

That means much of your vital data is not just at your home, at the office or in your wallet, but can easily be accessed by hooking up to the huge memory of the Internet "cloud" with portable devices.

"There's a lot of buzz about this. Everybody wants to be connected to everything everywhere," said Laura DiDio, an analyst with Information Technology Intelligence Corp.

Cloud computing for mobile devices is taking off with the expansion of high-speed wireless networks around the world.

"You're in a car driving someplace. Not only do you want directions, you want weather reports. You want to know what are the best hotels around, where are the restaurants," DiDio said.

That kind of information is available in cars -- and most other places -- via mobile phones, "netbook" laptops hooked up to wireless air cards and even high-end navigation systems.

The cloud has been around since the mid-1990s when Web pioneers such as Hotmail, Yahoo Inc and Amazon.com Inc started letting consumers manage communications, appointments and shopping via the Internet.

Expansion came after companies such as Google Inc offered free programs similar to Microsoft's Word and PowerPoint, using an ordinary PC hooked up to the Internet, or a wireless handheld computer, or phone such as Apple Inc's iPhone. Nowadays you can shoot a photo with your mobile phone and email it to a free photo-editing site such as Picnik.com. Rearden Commerce offers a "personal assistant" that manages airline bookings and restaurant reservations via Research in Motion Ltd's BlackBerry device.

NETBOOKS

The Internet cloud, which also stores photos, music and documents that could be lost if a mobile device or PC were damaged, also supports huge social networks such as Facebook and News Corp's MySpace.

"Cloud computing is going to accelerate. It's a no brainer," said Roger Entner, an analyst with Nielsen IAG. "The stronger the wireless networks become and the more ubiquitous they become, the easier it is to put things on the cloud."

PC makers including Dell Inc, Hewlett-Packard Co and Asustek Computer Inc have been successful in promoting "netbooks" -- a class of PCs introduced over the past two years that are essentially stripped down laptops, but smaller and less expensive. They are designed primarily to access the Web.

Nine of Amazon's 10 top-selling laptops are netbooks, which have little storage capacity and generally do not come with DVD drives. In the past, consumers paid a premium for smaller laptops, which often were high-end models.

"Netbooks hit an immediate sweet spot because of the price point," said Enderle Group analyst Rob Enderle.

A NEW TWIST

Back in the mid-1990s, Hotmail, now owned by Microsoft Corp, pioneered the use of a Web-based service.

Today Web-based email is one of the most widely used and easily accessible cloud services.

It works on ordinary laptops and netbooks, but it is rapidly gaining traction on "smart" mobile phones that share many functions with PCs. They include sophisticated devices such as the BlackBerry and iPhone, as well as a new generation of handhelds from companies that include HTC Corp, Nokia and Palm Inc.

Analysts expect Internet companies to focus more attention on cloud-based applications for consumers in 2009.

"There's no way to stop it," said Enderle of the Enderle Group. "It's just a case of getting more and more consumer offerings based in the cloud."

http://tech.yahoo.com/news/nm/20081217/wr_nm/us_column_pluggedin_1

The internet in 2020: Mobile, ubiquitous and full of free movies

http://www.earthtimes.org/articles/show/246802,the-internet-in-2020-mobile-ubiquitous-and-full-of-free-movies.html

Saturday, December 13, 2008

Musicians protest use of songs by US jailers

GUANTANAMO BAY NAVAL BASE, Cuba – Blaring from a speaker behind a metal grate in his tiny cell in Iraq, the blistering rock from Nine Inch Nails hit Prisoner No. 200343 like a sonic bludgeon.

"Stains like the blood on your teeth," Trent Reznor snarled over distorted guitars. "Bite. Chew."

The auditory assault went on for days, then weeks, then months at the U.S. military detention center in Iraq. Twenty hours a day. AC/DC. Queen. Pantera. The prisoner, military contractor Donald Vance of Chicago, told The Associated Press he was soon suicidal.

The tactic has been common in the U.S. war on terror, with forces systematically using loud music on hundreds of detainees in Iraq, Afghanistan and Guantanamo Bay. Lt. Gen. Ricardo Sanchez, then the U.S. military commander in Iraq, authorized it on Sept. 14, 2003, "to create fear, disorient ... and prolong capture shock."

Now the detainees aren't the only ones complaining. Musicians are banding together to demand the U.S. military stop using their songs as weapons.

A campaign being launched Wednesday has brought together groups including Massive Attack and musicians such as Tom Morello, who played with Rage Against the Machine and Audioslave and is now on a solo tour. It will feature minutes of silence during concerts and festivals, said Chloe Davies of the British law group Reprieve, which represents dozens of Guantanamo Bay detainees and is organizing the campaign.

At least Vance, who says he was jailed for reporting illegal arms sales, was used to rock music. For many detainees who grew up in Afghanistan — where music was prohibited under Taliban rule — interrogations by U.S. forces marked their first exposure to the pounding rhythms, played at top volume.

The experience was overwhelming for many. Binyam Mohammed, now a prisoner at Guantanamo Bay, said men held with him at the CIA's "Dark Prison" in Afghanistan wound up screaming and smashing their heads against walls, unable to endure more.

"There was loud music, (Eminem's) 'Slim Shady' and Dr. Dre for 20 days. I heard this nonstop over and over," he told his lawyer, Clive Stafford Smith. "The CIA worked on people, including me, day and night for the months before I left. Plenty lost their minds."

The spokeswoman for Guantanamo's detention center, Navy Cmdr. Pauline Storum, wouldn't give details of when and how music has been used at the prison, but said it isn't used today. She didn't respond when asked whether music might be used in the future.

FBI agents stationed at Guantanamo Bay reported numerous instances in which music was blasted at detainees, saying they were "told such tactics were common there."

According to an FBI memo, one interrogator at Guantanamo Bay bragged he needed only four days to "break" someone by alternating 16 hours of music and lights with four hours of silence and darkness.

Ruhal Ahmed, a Briton who was captured in Afghanistan, describes excruciating sessions at Guantanamo Bay. He said his hands were shackled to his feet, which were shackled to the floor, forcing him into a painful squat for periods of up to two days.

"You're in agony," Ahmed, who was released without charge in 2004, told Reprieve. He said the agony was compounded when music was introduced, because "before you could actually concentrate on something else, try to make yourself focus on some other things in your life that you did before and take that pain away.

"It makes you feel like you are going mad," he said.

Not all of the music is hard rock. Christopher Cerf, who wrote music for "Sesame Street," said he was horrified to learn songs from the children's TV show were used in interrogations.

"I wouldn't want my music to be a party to that," he told AP.

Bob Singleton, whose song "I Love You" is beloved by legions of preschool Barney fans, wrote in a newspaper opinion column that any music can become unbearable if played loudly for long stretches.

"It's absolutely ludicrous," he wrote in the Los Angeles Times. "A song that was designed to make little children feel safe and loved was somehow going to threaten the mental state of adults and drive them to the emotional breaking point?"

Morello, of Rage Against the Machine, has been especially forceful in denouncing the practice. During a recent concert in San Francisco, he proposed taking revenge on President George W. Bush.

"I suggest that they level Guantanamo Bay, but they keep one small cell and they put Bush in there ... and they blast some Rage Against the Machine," he said to whoops and cheers.

Some musicians, however, say they're proud that their music is used in interrogations. Those include bassist Stevie Benton, whose group Drowning Pool has performed in Iraq and recorded one of the interrogators' favorites, "Bodies."

"People assume we should be offended that somebody in the military thinks our song is annoying enough that played over and over it can psychologically break someone down," he told Spin magazine. "I take it as an honor to think that perhaps our song could be used to quell another 9/11 attack or something like that."

The band's record label told AP that Benton did not want to comment further. Instead, the band issued a statement reading: "Drowning Pool is committed to supporting the lives and rights of our troops stationed around the world."

Vance, in a telephone interview from Chicago, said the tactic can make innocent men go mad. According to a lawsuit he has filed, his jailers said he was being held because his employer was suspected of selling weapons to terrorists and insurgents. The U.S. military confirms Vance was jailed but won't elaborate because of the lawsuit.

He said he was locked in an overcooled 9-foot-by-9-foot cell that had a speaker with a metal grate over it. Two large speakers stood in the hallway outside. The music was almost constant, mostly hard rock, he said.

"There was a lot of Nine Inch Nails, including 'March of the Pigs,'" he said. "I couldn't tell you how many times I heard Queen's 'We Will Rock You.'"

He wore only a jumpsuit and flip-flops and had no protection from the cold.

"I had no blanket or sheet. If I had, I would probably have tried suicide," he said. "I got to a few points toward the end where I thought, 'How can I do this?' Actively plotting, 'How can I get away with it so they don't stop it?'"

Asked to describe the experience, Vance said: "It sort of removes you from you. You can no longer formulate your own thoughts when you're in an environment like that."

He was released after 97 days. Two years later, he says, "I keep my home very quiet."

Thursday, November 6, 2008

Kevin Kelly's Latest

The Ninth Transition of Evolution

Many folks responded to my inquiry about evidence of a global super-organism. Among the most detailed and well-considered was Nova Spivack's long essay posted on Twine. Twine is a crowd-sourced aggregator of knowledge, superficially like the shared bookmarks of Delicious or StumbleUpon, but with more room for comments and potentially more connections between posts. Nova founded Twine. I've been trying it out. One idea Nova mentioned in his essay is, I think, worth developing. He suggests three stages of development for collective action.

1. Crowds. Crowds are collectives in which the individuals are not aware of the whole and in which there is no unified sense of identity or purpose. Nevertheless crowds do intelligent things. Consider, for example, schools of fish or flocks of birds. There is no single leader, yet the individuals, by adapting to what their nearby neighbors are doing, behave collectively as a single entity of sorts.

2. Groups. Groups are the next step up from crowds. Groups have some form of structure, which usually includes a system for command and control. They are more organized. Groups are capable of much more directed and intelligent behaviors. Families, cities, workgroups, sports teams, armies, universities, corporations, and nations are examples of groups. They may have a primitive sense of identity and self, and on the basis of that, they are capable of planning and acting in a more coordinated fashion.

3. Meta-Individuals. The highest level of collective intelligence is the meta-individual. This emerges when what was once a crowd of separate individuals evolves to become a new individual in its own right, and it is facilitated by the formation of a sophisticated meta-level self-construct for the collective. This new whole resembles the parts, but transcends their abilities. High-level collective consciousness requires a sophisticated collective self-construct to serve as a catalyst.
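The crowd stage, where leaderless individuals end up moving as one simply by matching their nearby neighbors, can be illustrated with a toy alignment model. This is a minimal Python sketch under assumed parameters (agents on a ring, a neighbor radius of one, headings treated as plain numbers that ignore angle wraparound, 200 steps); it is loosely in the spirit of flocking simulations and is not taken from Spivack's essay.

```python
import statistics

def step(headings, radius=1):
    """One round: each agent adopts the average heading of itself and its
    neighbors within `radius` positions on a ring. No agent sees the whole group."""
    n = len(headings)
    new = []
    for i in range(n):
        neighborhood = [headings[(i + k) % n] for k in range(-radius, radius + 1)]
        new.append(sum(neighborhood) / len(neighborhood))
    return new

def simulate(headings, steps=200, radius=1):
    """Repeat purely local averaging for a fixed number of rounds."""
    for _ in range(steps):
        headings = step(headings, radius)
    return headings

# Hypothetical starting headings (degrees) for eight agents.
start = [0.0, 90.0, 45.0, 180.0, 10.0, 120.0, 60.0, 30.0]
end = simulate(start)

# The spread of headings collapses toward zero: the crowd now moves as one.
print(round(statistics.pstdev(start), 2), round(statistics.pstdev(end), 6))
```

Because each agent only ever looks at its immediate neighbors, any agreement that appears is a property of the collective rather than of a leader, which is the distinction the crowd stage draws.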

What Nova Spivack suggests here is that the path from random population to meta-individual is a path of increasing structure. The parts are more tightly bound in relationships, and as they gain in interdependence, the whole advances to the next phase. I think a close study of how meta-individuals, or super-organisms (which I think are the same thing), form would reveal that there may be more than three stages, or perhaps more than one pathway. I think the main research hurdle in describing this development is to specify what exactly is being structured. My guess is that it is the informational nature of the organism.

In the landmark book "The Major Transitions in Evolution," the authors John Maynard Smith and Eörs Szathmáry lay out the eight major phases of development in biological evolution so far, and perhaps not remarkably, these eight stages resemble the path from random population to meta-individual at each level. In other words, Maynard Smith and Szathmáry say that evolution is a continued, graduated progression in which smaller units form larger, higher-level units, and then those new meta-individuals start to form a new group, in which each meta-individual is a mere individual. Thus life has formed a super-organism structure eight times so far. These eight levels or stages of super-organization are:

From replicating molecules to bounded populations of molecules

From populations of replicators to chromosomes

From RNA chromosomes to DNA genes and proteins

From Prokaryotes to Eukaryotes

From Asexual clones to sexual populations

From single-cell protists to multicellular organisms

From solitary individuals to colonies

From animal societies to language-based human societies

As the Wikipedia entry on the theory states, Maynard Smith and Szathmáry extract several principles they find common to these eight transitions.

1. Smaller entities have often come together to form larger entities. e.g. Chromosomes, eukaryotes, sex, multicellularity, colonies.

2. Smaller entities often become differentiated as part of a larger entity. e.g. DNA & protein, organelles, anisogamy, tissues, castes

3. The smaller entities are often unable to replicate in the absence of the larger entity. e.g. Organelles, tissues, castes

4. The smaller entities can sometimes disrupt the development of the larger entity. e.g. Meiotic drive (selfish non-Mendelian genes), parthenogenesis, cancers, coup d’état

5. New ways of transmitting information have arisen. e.g. DNA-protein, cell heredity, epigenesis, universal grammar.

I believe the last point is the cause and not a symptom of the transition.

Another way to view these transitions is as increased levels or varieties of cooperation. At each stage there is a tension between the selfish needs of the individual and the needs of the collective. Robert Wright, writing in "Nonzero," argues that the evolution of humanity is one long progression of increasing cooperation, starting from the first cell of life, where both "sides" win. Rather than having to choose between the interests of the individual and those of the meta-individual collective in a zero-sum game, evolution innovates ways to structure cooperation so that both the individual and the group benefit in a non-zero-sum win/win. John Stewart, author of "Evolution's Arrow," argues that the direction of evolution is to extend cooperation over larger spans of time and space. In the beginning atoms "cooperated" to form molecules, then replicators, then DNA, and so on, where greater amounts of material are interdependent for greater lengths of time. He suggests we can see where evolution is going by imagining a next phase that will increase the span of cooperation further.

That, of course, would be the ninth transition:

From human society to a global super-organism containing both humans and their machines.

For this to happen, humans would have to benefit directly, as would the One Machine. (Nova suggests we abbreviate the One Machine as OM, pronounced Om, as in the mantra. That works for me.) There has to be a non-zero-sum benefit for individual humans and for the larger collective of the OM. We see such benefits in the use of the web. In fact the web is ruled by network effects, which is another way of stating that increasing benefits accrue to a collective (network) with the participation of additional individuals, who join because they also get a direct benefit. Humans use Google because they benefit greatly, and their use makes Google better.
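One common way to put a rough number on a network effect is to count the possible pairwise connections among participants (the idea usually called Metcalfe's law). The minimal Python sketch below does only that; treating every possible link as equally valuable is a simplifying assumption, not a claim made in this essay.

```python
def possible_links(n: int) -> int:
    """Distinct pairs that can form among n participants: n * (n - 1) / 2."""
    return n * (n - 1) // 2

for users in (10, 100, 1000):
    # Each newcomer can link to everyone already present, so the number of
    # possible links grows much faster than the number of users.
    print(users, possible_links(users))
```

The growth is the non-zero-sum point in miniature: the n-th newcomer adds n - 1 new possible links, which benefits the person joining as well as everyone already in the network.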

At every stage of evolutionary development we see:

1. Increased cooperation among parts, benefiting both parts and the whole.

2. Increased span of interdependence in space and time.

3. Increased complexity of informational flow.

4. Emergence of a new level of control.

For the ninth transition in life's evolution -- the transition to a planetary level organization of humans and machines -- we should expect to see:

1. Increased cooperation among humans, benefiting both humans and the OM.

2. Increased span of interdependence. Planetary scale, things happening and enduring longer or quicker than before.

3. Increased complexity of informational flow. New ways of connecting, organizing, and relating not possible before.

4. Emergence of a new level of control. An innovation (like DNA, the spinal cord, or government) that takes control of functions in order to benefit constituents non-zero-ly.

http://www.kk.org/thetechnium/archives/2008/11/the_ninth_trans.php

Wednesday, November 5, 2008

Wired.com Photo Contest: Music

Your assignment for this photo contest is both simple and difficult: music. Move beyond the band and concert cliches and show us what music means to you.

Use the Reddit widget below to submit your best music photo and vote for your favorite among the other submissions. The 10 highest-ranked photos will appear in a gallery on the Wired.com homepage. Show us your grandpa's old dusty stacks of shellac, the piano in the backyard overgrown with moss and ivy, an exotic minstrel in the heart of a Mediterranean bazaar. Deliver us to psychedelic synesthesia by making us hear your vivid photos with our eyes.

The photo must be your own, and by submitting it you are giving us permission to use it on Wired.com and in Wired magazine. Please submit images that are relatively large, the ideal size being 800 to 1200 pixels or larger on the longest side. Please include a description of your photo, which may include exposure information, equipment used, etc.

We don't host the photos, so you'll have to upload it somewhere else and submit a link to it. If you're using Flickr, Picasa or another photo-sharing site to host your image, please provide a link to the image directly and not just to the photo page where it's displayed. Using an online photo service that requires that you log in will not work. If your photo doesn't show up, it's because the URL you have entered is incorrect. Check it and make sure it ends with the image file name (XXXXXX.jpg).

Please bookmark this page and check back periodically over the next two weeks to vote on new submissions!

Follow the link to see the photos:

http://www.wired.com/culture/art/news/2008/11/submissions_music

Saturday, November 1, 2008

Evidence of a Global SuperOrganism

By Kevin Kelly

http://www.kk.org/thetechnium/

I am not the first, nor the only one, to believe a superorganism is emerging from the cloak of wires, radio waves, and electronic nodes wrapping the surface of our planet. No one can dispute the scale or reality of this vast connectivity. What's uncertain is, what is it? Is this global web of computers, servers and trunk lines a mere mechanical circuit, a very large tool, or does it reach a threshold where something, well, different happens?

So far the proposition that a global superorganism is forming along the internet power lines has been treated as a lyrical metaphor at best, and as a mystical illusion at worst. I've decided to treat the idea of a global superorganism seriously, and to see if I could muster a falsifiable claim and evidence for its emergence.

My hypothesis is this: The rapidly increasing sum of all computational devices in the world connected online, including wirelessly, forms a superorganism of computation with its own emergent behaviors.

Superorganisms are a different type of organism. Large things are made from smaller things. Big machines are made from small parts, and visible living organisms from invisible cells. But these parts don't usually stand on their own. In a slightly fractal recursion, the parts of a superorganism lead fairly autonomous existences on their own. A superorganism such as an insect or mole rat colony contains many sub-individuals. These individual organisms eat, move about, and get things done on their own. From most perspectives they appear complete. But in the case of the social insects and the naked mole rat, these autonomous sub-individuals need the super colony to reproduce themselves. In this way reproduction is a phenomenon that occurs at the level of the superorganism.

I define the One Machine as the emerging superorganism of computers. It is a megasupercomputer composed of billions of sub computers. The sub computers can compute individually on their own, and from most perspectives these units are distinct complete pieces of gear. But there is an emerging smartness in their collective that is smarter than any individual computer. We could say learning (or smartness) occurs at the level of the superorganism.

Supercomputers built from subcomputers were invented 50 years ago. Back then clusters of tightly integrated specialized computer chips in close proximity were designed to work on one kind of task, such as simulations. This was known as cluster computing. In recent years, we've created supercomputers composed of loosely integrated individual computers not centralized in one building, but geographically distributed over continents and designed to be versatile and general purpose. This latter supercomputer is called grid computing because the computation is served up as a utility to be delivered anywhere on the grid, like electricity. It is also called cloud computing because the tally of the exact component machines is dynamic and amorphous, like a cloud. The actual contours of the grid or cloud can change by the minute as machines come on or off line.

There are many cloud computers at this time. Amazon is credited with building one of the first commercial cloud computers. Google probably has the largest cloud computer in operation. According to Jeff Dean, one of their infrastructure engineers, Google is hoping to scale up their cloud computer to encompass 10 million processors in 1,000 locations.

Each of these processors is an off-the-shelf PC chip that is nearly identical to the ones that power your laptop. A few years ago computer scientists realized that it did not pay to make specialized chips for a supercomputer. It was far more cost effective to just gang up rows and rows of cheap generic personal computer chips, and route around them when they fail. The data centers for cloud computers are now filled with racks and racks of the most mass-produced chips on the planet. An unexpected bonus of this strategy is that their high production volume means bugs are minimized and so the generic chips are more reliable than any custom chip they could have designed.
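The strategy of ganging up cheap generic chips and routing around the ones that fail can be sketched as a scheduler that hands work only to nodes not marked as failed. This is a toy Python illustration: the node names, the failed set, and the round-robin policy are all hypothetical and are not a description of how Google or Amazon actually schedule work.

```python
from itertools import cycle

def assign_tasks(tasks, nodes, failed):
    """Assign each task round-robin to a node that is not in `failed`.

    Failed machines are simply skipped (routed around) rather than repaired;
    raises RuntimeError if no healthy node remains.
    """
    healthy = [node for node in nodes if node not in failed]
    if not healthy:
        raise RuntimeError("no healthy nodes available")
    ring = cycle(healthy)
    return {task: next(ring) for task in tasks}

# Hypothetical rack of six commodity machines, two of which have died.
nodes = [f"pc-{i}" for i in range(6)]
plan = assign_tasks([f"job-{j}" for j in range(8)], nodes, failed={"pc-1", "pc-4"})
for task, node in plan.items():
    print(task, "->", node)
```

The design choice mirrors the paragraph: no single machine is precious, so a failure only shrinks the pool of candidates instead of stopping the work.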

If the cloud is a vast array of personal computer processors, then why not add your own laptop or desktop computer to it? In a certain way it already is. Whenever you are online, whenever you click on a link or create a link, your processor is participating in the yet larger cloud, the cloud of all computer chips online. I call this cloud the One Machine because in many ways it acts as one supermegacomputer.

The majority of the content of the web is created within this one virtual computer. Links are programmed, clicks are chosen, files are moved and code is installed from the dispersed, extended cloud created by consumers and enterprise: the tons of smart phones, MacBooks, BlackBerries, and workstations we work in front of. While the business of moving bits and storing their history all happens deep in the tombs of server farms, the cloud's interaction with the real world takes place in the extremely distributed field of laptop, hand-held and desktop devices. Unlike servers, these outer devices have output screens, and eyes, skin, and ears in the form of cameras, touch pads, and microphones. We might say the cloud is embodied primarily by these computer chips in parts only loosely joined to the grid.

This megasupercomputer is the Cloud of all clouds, the largest possible inclusion of communicating chips. It is a vast machine of extraordinary dimensions. It is composed of a quadrillion chips, and consumes 5% of the planet's electricity. It is not owned by any one corporation or nation (yet), nor is it really governed by humans at all. Several corporations run the larger sub-clouds, and one of them, Google, dominates the user interface to the One Machine at the moment.

None of this is controversial. Seen from an abstract level there surely must be a very large collective virtual machine. But that is not what most people think of when they hear the term a "global superorganism." That phrase suggests the sustained integrity of a living organism, or a defensible and defended boundary, or maybe a sense of self, or even conscious intelligence.

Sadly, there is no ironclad definition for some of the terms we most care about, such as life, mind, intelligence and consciousness. Each of these terms has a long list of traits often but not always associated with them. Whenever these traits are cast into a qualifying definition, we can easily find troublesome exceptions. For instance, if reproduction is needed for the definition of life, what about mules, which are sterile? Mules are obviously alive. Intelligence is a notoriously slippery threshold, and consciousness more so. The logical answer is that all these phenomena are continuums. Some things are smarter, more alive, or less conscious than others. The thresholds for life, intelligence, and consciousness are gradients rather than on-off binaries.

With that perspective a useful way to tackle the question of whether a planetary superorganism is emerging is to offer a gradient of four assertions.

There exists on this planet:

- I. A manufactured superorganism

- II. An autonomous superorganism

- III. An autonomous smart superorganism

- IV. An autonomous conscious superorganism

These four could be thought of as an escalating set of definitions. At the bottom we start with the almost trivial observation that we have constructed a globally distributed cluster of machines that can exhibit large-scale behavior. Call this the weak form of the claim. Next come the two intermediate levels, which are uncertain and vexing (and therefore probably the most productive to explore). Then we end up at the top with the extreme assertion of "Oh my God, it's thinking!" That's the strong form of the superorganism. Very few people would deny the weak claim and very few would affirm the strong.

My claim is that in addition to being four strengths of definition, the four levels are developmental stages through which the One Machine progresses. It starts out forming a plain superorganism, then becomes autonomous, then smart, then conscious. The phases are soft, feathered, and blurred. My hunch is that the One Machine has advanced through levels I and II in the past decades and is presently entering level III. If that is true we should find initial evidence of an autonomous smart (but not conscious) computational superorganism operating today.

But let's start at the beginning.

LEVEL I

A manufactured superorganism

By definition, organisms and superorganisms have boundaries. An outside and inside. The boundary of the One Machine is clear: if a device is on the internet, it is inside. "On" means it is communicating with the other inside parts. Even though some components are "on" in terms of consuming power, they may be on (communicating) for only brief periods. Your laptop may be useful to you on a 5-hour plane ride, but it may be technically "on" the One Machine only when you land and it finds a wifi connection. An unconnected TV is not part of the superorganism; a connected TV is. Most of the time the embedded chip in your car is off the grid, but on the few occasions when its contents are downloaded for diagnostic purposes, it becomes part of the greater cloud. The dimensions of this network are measurable and finite, although variable.

The One Machine consumes electricity to produce structured information. Like other organisms, it is growing. Its size is increasing rapidly, close to 66% per year, which is basically the rate of Moore's Law. Every year it consumes more power, more material, more money, more information, and more of our attention. And each year it produces more structured information, more wealth, and more interest.
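A quick back-of-the-envelope check on that growth figure, as a minimal Python sketch: the function names are illustrative, and the only input taken from the text is the 66% rate. It converts a steady annual growth rate into a doubling time, and a doubling time back into an annual rate, so the figure can be set against the usual phrasings of Moore's Law.

```python
import math

def doubling_time_years(annual_growth: float) -> float:
    """Years to double in size at a steady annual growth rate (0.66 means 66% a year)."""
    return math.log(2) / math.log(1 + annual_growth)

def annual_growth_rate(doubling_months: float) -> float:
    """Annual growth rate implied by doubling every `doubling_months` months."""
    return 2 ** (12 / doubling_months) - 1

years = doubling_time_years(0.66)
print(f"66% a year doubles every {years:.2f} years ({years * 12:.1f} months)")
for months in (18, 24):
    print(f"doubling every {months} months is {annual_growth_rate(months):.0%} a year")
```

Run as written, 66% per year works out to a doubling roughly every 16 months; the familiar 18-month and 24-month phrasings of Moore's Law correspond to about 59% and 41% per year.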

On average, the cells of biological organisms have a resting metabolism rate of between 1 and 10 watts per kilogram. Based on research by Jonathan Koomey at UC Berkeley, the most efficient common data servers in 2005 (by IBM and Sun) had a metabolism rate of 11 watts per kilogram. Currently the other parts of the Machine (the electric grid itself, the telephone system) may not be as efficient, but I haven't found any data on them yet. Energy efficiency is a huge issue for engineers. As the size of the One Machine scales up, the metabolism rate for the whole will probably drop (although the total amount of energy consumed rises).
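To make the comparison concrete, here is a small Python sketch of the arithmetic. The 1 to 10 W/kg band comes from the text; the 330 W, 30 kg server and the 100 W, 20 kg add-on are hypothetical numbers chosen for illustration, not figures from Koomey's research. The rate for a whole fleet is total power over total mass, which is why adding lower-intensity parts can pull the aggregate rate down even as total consumption rises.

```python
# Resting metabolism range for biological cells cited in the text, in watts per kilogram.
BIO_RANGE_W_PER_KG = (1.0, 10.0)

def metabolism_rate(watts: float, kilograms: float) -> float:
    """Power draw per unit mass (W/kg)."""
    if kilograms <= 0:
        raise ValueError("mass must be positive")
    return watts / kilograms

def fleet_rate(parts: list[tuple[float, float]]) -> float:
    """Aggregate W/kg for (watts, kilograms) pairs: total power divided by total mass."""
    total_watts = sum(w for w, _ in parts)
    total_kg = sum(kg for _, kg in parts)
    return metabolism_rate(total_watts, total_kg)

def compare_to_biology(rate: float) -> str:
    """Place a W/kg figure relative to the biological range."""
    low, high = BIO_RANGE_W_PER_KG
    if rate < low:
        return "below"
    if rate > high:
        return "above"
    return "within"

# Hypothetical server: 330 W drawn by 30 kg of hardware.
server = metabolism_rate(330, 30)
print(f"server: {server:.1f} W/kg, {compare_to_biology(server)} the biological range")

# Adding a hypothetical 100 W, 20 kg component raises total power but lowers the aggregate rate.
fleet = fleet_rate([(330, 30), (100, 20)])
print(f"fleet: {fleet:.1f} W/kg, {compare_to_biology(fleet)} the biological range")
```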

The span of the Machine is roughly the size of the surface of the earth. Some portion of it floats a few hundred miles above in orbit, but at the scale of the planet, satellites, cell towers and server farms form the same thin layer. Activity in one part can be sensed across the entire organism; it forms a unified whole.

Within a hive, individual honeybees are incapable of thermoregulation. The hive superorganism must regulate the bees' working temperature. It does this by collectively fanning thousands of tiny bee wings, which moves hot air out of the colony. Likewise, individual computers are incapable of governing the flow of bits between themselves in the One Machine.

Prediction: the One Machine will continue to grow. We should see how data flows around this whole machine in response to daily usage patterns (see Follow the Moon). The metabolism rate of the whole should approach that of a living organism.

LEVEL II

An autonomous superorganism

Autonomy is a problematic concept. There are many who believe that no non-living entity can truly be said to be autonomous. We have plenty of examples of partial autonomy in created things. Autonomous airplane drones: they steer themselves, but they don't repair themselves. We have self-repairing networks that don't reproduce themselves. We have self-reproducing computer viruses, but they don't have a metabolism. All these inventions require human help for at least one aspect of their survival. To date we have not conjured up a fully human-free sustainable synthetic artifact of any type.

But autonomy, too, is a continuum. Partial autonomy is often all we need, or want. We'll be happy with miniature autonomous cleaning bots that require our help, and approval, to reproduce. A global superorganism doesn't need to be fully human-free for us to sense its autonomy. We would acknowledge a degree of autonomy if an entity displayed any of these traits: self-repair, self-defense, self-maintenance (securing energy, disposing waste), self-control of goals, self-improvement. The common element in all these characteristics is of course the emergence of a self at the level of the superorganism.

In the case of the One Machine, we should look for evidence of self-governance at the level of the greater cloud rather than at the level of the component chip. A very common cloud-level phenomenon is the DDoS attack. In a Distributed Denial of Service (DDoS) attack, a vast hidden network of computers under the control of a master computer is awakened from its ordinary tasks and secretly assigned to "ping" (call) a particular target computer en masse in order to overwhelm it and take it offline. Some of these networks (called botnets) may reach a million unsuspecting computers, so the effect of this distributed attack is quite substantial. From the level of the individual computer it is hard to detect the net, to pin down its command, and to stop it. DDoS attacks are so massive that they can disrupt traffic flows outside of the targeted routers, a consequence we might expect from a superorganism-level event.
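Why a flood of tiny, individually innocuous requests is so damaging can be seen in a toy capacity model, sketched here in Python. The model is an assumption for illustration: the target answers at most a fixed number of requests per second and drops the excess at random, so legitimate users compete on equal terms with the bots. The function name and all the numbers are hypothetical; only the "million unsuspecting computers" scale comes from the text.

```python
def served_fraction(legit_rps: float, bots: int, rps_per_bot: float, capacity_rps: float) -> float:
    """Share of legitimate requests a target still answers during a flood.

    Toy assumption: the target handles at most `capacity_rps` requests per second
    and drops any excess at random, so legitimate and attack requests are
    equally likely to be served.
    """
    offered = legit_rps + bots * rps_per_bot
    if offered <= capacity_rps:
        return 1.0
    return capacity_rps / offered

# Hypothetical numbers: a site built for 10,000 requests/s with 1,000 requests/s of real users.
print(served_fraction(1_000, 0, 1.0, 10_000))          # no attack: everything is served
print(served_fraction(1_000, 1_000_000, 1.0, 10_000))  # a million bots, one request/s each
```

In the second case fewer than 1% of legitimate requests get through, even though each bot sends only one request per second, a rate no individual machine owner would notice. That is part of why the net is so hard to see from the individual level.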

I don't think we can make too much of it yet, but researchers such as Reginald Smith have noticed a profound change in the nature of traffic on the communications network over the last few decades, as it shifted from chiefly voice to a mixture of data, voice, and everything else. Voice traffic during the Bell/AT&T era obeyed a pattern known as a Poisson distribution, roughly resembling a Gaussian bell curve. But ever since data from diverse components and web pages became the majority of bits on the lines, traffic on the internet has followed a scale-invariant, or fractal, or power-law pattern. Here the distribution of very large and very small packets falls onto a curve familiarly recognized as the long-tail curve. These scale-invariant, or long-tail, traffic patterns have meant that engineers needed to devise a whole set of new algorithms for shaping teletraffic. This phase change toward scale-invariant traffic patterns may be evidence for an elevated degree of autonomy.

Other researchers have detected sensitivity to initial conditions, "strange attractor" patterns, and stable periodic orbits in the self-similar nature of traffic, all indications of self-governing systems. Scale-free distributions can be understood as a result of internal feedback, usually brought about by loose interdependence between the units. Feedback loops constrain the actions of bits by other bits. For instance, the Ethernet collision detection management algorithm (CSMA/CD) employs feedback loops to manage congestion, backing off after collisions in response to other traffic. Built on mechanisms like these, the foundational TCP/IP system underpinning internet traffic "behaves in part as a massive closed loop feedback system." While the scale-free pattern of internet traffic is indisputable and verified by many studies, there is dispute over whether it means the system itself tends to optimize traffic efficiency, though some believe it does.
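The CSMA/CD back-off mentioned above is a compact example of bits constraining other bits. Below is a minimal Python sketch of the truncated binary exponential backoff rule from IEEE 802.3, placed inside a deliberately simplified contention model: colliding stations back off in lockstep, only the stations tied for the earliest slot collide again, and slot timing and carrier sensing are ignored. The helper names are hypothetical. The feedback is visible in the rule itself: every further collision doubles the range each station draws its wait from (up to a cap), so the more crowded the wire, the more the stations spread themselves out.

```python
import random
from typing import Optional

MAX_ATTEMPTS = 16   # 802.3 discards a frame after 16 failed transmission attempts
BACKOFF_CAP = 10    # the backoff exponent stops growing after the 10th collision

def backoff_slots(collisions: int, rng: random.Random) -> int:
    """Truncated binary exponential backoff: after the n-th collision, wait k slot
    times, with k drawn uniformly from 0 .. 2**min(n, 10) - 1."""
    return rng.randint(0, 2 ** min(collisions, BACKOFF_CAP) - 1)

def collisions_before_success(stations: int, rng: random.Random) -> Optional[int]:
    """Simplified contention: `stations` collide at once, each backs off, and the
    stations tied for the earliest slot collide again. Returns how many collisions
    happened before one station transmitted alone, or None if the frame is dropped."""
    contenders = stations
    for collisions in range(1, MAX_ATTEMPTS):
        picks = [backoff_slots(collisions, rng) for _ in range(contenders)]
        tied = picks.count(min(picks))
        if tied == 1:
            return collisions      # a single station owns the earliest slot
        contenders = tied          # the tied stations collide again
    return None                    # the 16th attempt also collided: give up

rng = random.Random(1)
for n in (2, 8, 32):
    runs = [collisions_before_success(n, rng) for _ in range(1000)]
    done = [r for r in runs if r is not None]
    print(n, "stations:", round(sum(done) / len(done), 2), "collisions on average")
```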

Unsurprisingly, the vast flows of bits in the global internet exhibit periodic rhythms. Most of these are diurnal and resemble a heartbeat. But perturbations of internet bit flows caused by massive traffic congestion can also be seen. Analysis of these "abnormal" events shows great similarity to abnormal heartbeats. They deviate from an "at rest" rhythm the same way that the fluctuations of a diseased heart deviate from a healthy heartbeat.
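One simple way to find such a rhythm in a traffic log is autocorrelation: compare the series with a shifted copy of itself and see which shift lines up best. The Python sketch below assumes hourly bit counts and uses a synthetic 28-day series (a 24-hour sine wave around a baseline, plus noise) rather than real measurements; the function names are hypothetical.

```python
import math
import random
import statistics

def autocorrelation(series: list[float], lag: int) -> float:
    """Correlation between the series and itself shifted by `lag` samples."""
    mean = statistics.fmean(series)
    dev = [x - mean for x in series]
    variance = sum(d * d for d in dev) / len(dev)
    pairs = len(dev) - lag
    covariance = sum(dev[i] * dev[i + lag] for i in range(pairs)) / pairs
    return covariance / variance

def dominant_period(series: list[float], min_lag: int, max_lag: int) -> int:
    """The lag between min_lag and max_lag at which the series best matches itself."""
    return max(range(min_lag, max_lag + 1), key=lambda lag: autocorrelation(series, lag))

# Synthetic hourly traffic for 28 days: a 24-hour cycle around a baseline, plus noise.
rng = random.Random(7)
traffic = [100 + 40 * math.sin(2 * math.pi * t / 24) + rng.gauss(0, 1) for t in range(24 * 28)]
print(dominant_period(traffic, 12, 36))  # the daily cycle should show up as a lag of 24
```

The search window of 12 to 36 hours is a design choice: very short lags always correlate strongly with the series itself, and a 48-hour lag matches as well as 24, so bracketing the expected period keeps the answer unambiguous.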

Prediction: The One Machine has a low order of autonomy at present. If the superorganism hypothesis is correct, in the next decade we should detect more scale-invariant phenomena, more cases of stabilizing feedback loops, and a more autonomous traffic management system.
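How might someone actually check for growing scale invariance? One standard approach is to total up traffic over longer and longer windows and watch the variance-to-mean ratio: for Poisson-style voice traffic it stays near 1 at every scale, while for bursty, self-similar traffic it keeps climbing as the windows grow. The Python sketch below compares the two on synthetic data. The heavy-tailed model (sessions arriving at random but lasting a Pareto-distributed number of time slots) is one textbook way to produce self-similar traffic, chosen here as an assumption for illustration; it is not the method of the studies mentioned above, and all parameters are made up.

```python
import math
import random
import statistics

def poisson(lam: float, rng: random.Random) -> int:
    """Knuth's method: multiply uniforms until the product drops below e^-lam."""
    limit, k, product = math.exp(-lam), 0, rng.random()
    while product > limit:
        k += 1
        product *= rng.random()
    return k

def poisson_traffic(slots: int, lam: float, rng: random.Random) -> list[int]:
    """Independent Poisson counts per slot: a stand-in for voice-era traffic."""
    return [poisson(lam, rng) for _ in range(slots)]

def heavy_tailed_traffic(slots: int, lam: float, alpha: float, rng: random.Random,
                         warmup: int = 1000) -> list[int]:
    """Active sessions per slot when sessions start as Poisson arrivals but last a
    Pareto(alpha)-distributed number of slots (1 < alpha < 2 gives long memory).
    The first `warmup` slots, while the system fills up, are discarded."""
    total = slots + warmup
    change = [0] * (total + 1)
    for start in range(total):
        for _ in range(poisson(lam, rng)):
            length = math.ceil(rng.paretovariate(alpha))
            change[start] += 1
            change[min(total, start + length)] -= 1
    counts, active = [], 0
    for t in range(total):
        active += change[t]
        counts.append(active)
    return counts[warmup:]

def aggregated_dispersion(counts: list[int], block: int) -> float:
    """Variance-to-mean ratio of counts summed over non-overlapping blocks."""
    sums = [sum(counts[i:i + block]) for i in range(0, len(counts) - block + 1, block)]
    return statistics.pvariance(sums) / statistics.fmean(sums)

rng = random.Random(42)
voice = poisson_traffic(20_000, 5.0, rng)
data = heavy_tailed_traffic(20_000, 2.0, 1.5, rng)
for block in (1, 10, 100):
    print(block, round(aggregated_dispersion(voice, block), 2),
          round(aggregated_dispersion(data, block), 2))
```

With these settings the voice column should hover near 1 at every block size, while the heavy-tailed column should grow as the blocks get longer: bursts that would average out in Poisson traffic persist at every scale.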

LEVEL III

An autonomous smart superorganism

Organisms can be smart without being conscious. A rat is smart, but, we presume, without much self-awareness. If the One Machine were as unconsciously smart as a rat, we would expect it to follow the strategies a clever animal would pursue. It would seek sources of energy, and it would gather as many other resources as it could find, maybe even hoard them. It would look for safe, secure shelter. It would steal anything it needed to grow. It would fend off attempts to kill it. It would resist parasites, but not bother to eliminate them if they caused no mortal harm. It would learn and get smarter over time.

Google and Amazon, two clouds of distributed computers, are getting smarter. Google has learned to spell. By watching the patterns of correct-spelling humans online it has become a good enough speller that it now corrects bad-spelling humans. Google is learning dozens of languages, and is constantly getting better at translating from one language to another. It is learning how to perceive the objects in a photo. And of course it is constantly getting better at answering everyday questions. In much the same manner Amazon has learned to use the collective behavior of humans to anticipate their reading and buying habits. It is far smarter than a rat in this department.
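Here is a toy illustration of what learning to spell from other people can mean, in the spirit of Peter Norvig's well-known frequency-based spelling corrector. It is a minimal Python sketch with an invented mini-corpus, not a description of how Google's own system works: the model counts the words it has seen people write and, faced with an unknown word, picks the most frequent known word one edit away.

```python
import re
from collections import Counter

LETTERS = "abcdefghijklmnopqrstuvwxyz"

def build_model(text: str) -> Counter:
    """Count how often each lowercase word appears in text people have written."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def edits1(word: str) -> set[str]:
    """Every string one deletion, transposition, replacement, or insertion away."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [a + b[1:] for a, b in splits if b]
    transposes = [a + b[1] + b[0] + b[2:] for a, b in splits if len(b) > 1]
    replaces = [a + c + b[1:] for a, b in splits if b for c in LETTERS]
    inserts = [a + c + b for a, b in splits for c in LETTERS]
    return set(deletes + transposes + replaces + inserts)

def correct(word: str, counts: Counter) -> str:
    """Keep known words; otherwise return the most common known word one edit away."""
    if word in counts:
        return word
    candidates = [w for w in edits1(word) if w in counts]
    if not candidates:
        return word
    # Ties go to the alphabetically last word, just to keep results deterministic.
    return max(candidates, key=lambda w: (counts[w], w))

# A hypothetical scrap of correctly spelled text standing in for "the web".
CORPUS = "People receive letters and receive news. They believe what they read, and they believe good spelling matters."
counts = build_model(CORPUS)
print(correct("recieve", counts), correct("beleive", counts))  # receive believe
```

The more correctly spelled text the model sees, the better its counts reflect how people actually write, which is the sense in which watching good spellers makes it a better speller.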

Cloud computers such as Google and Amazon form the learning center for the smart superorganism. Let's call this organ el Googazon, or el Goog for short. El Goog encompasses more than the functions of the company Google; it includes all the functions provided by Yahoo, Amazon, Microsoft online and other cloud-based services. This loosely defined cloud behaves like an animal.

El Goog seeks sources of energy. It is building power plants around the world at strategic points of cheap energy. It is using its own smart web to find yet cheaper energy places and to plan future power plants. El Goog is sucking in the smartest humans on earth to work for it, to help make it smarter. The smarter it gets, the more smart people, and smarter people, want to work for it. El Goog ropes in money. Money is its higher metabolism. It takes the money of investors to create technology which attracts human attention (ads), which in turn creates more money (profits), which attracts more investment. The smarter it makes itself, the more attention and money will flow to it.

Manufactured intelligence is a new commodity in the world. Until now all usable intelligence came in the package of humans, along with all their troubles. El Goog and the One Machine offer intelligence without human troubles. In the beginning this intelligence is transhuman rather than non-human intelligence. It is the smartness derived from the wisdom of human crowds, but as it continues to develop, this smartness transcends a human type of thinking. Humans will eagerly pay for El Goog intelligence. It is a different kind of intelligence. It is not artificial (i.e., mechanical) because it is extracted from billions of humans working within the One Machine. It is a hybrid intelligence, half humanity, half computer chip. Therefore it is probably more useful to us. We don't know what the limits are to its value. How much would you pay for a portable genius who knew all there was to know?

With the snowballing wealth from this fiercely desirable intelligence, el Goog builds a robust network that cannot be unplugged. It uses its distributed intelligence to devise more efficient energy technologies, more wealth producing inventions, and more favorable human laws for its continued prosperity. El Goog is developing an immune system to restrict the damage from viruses, worms and bot storms to the edges of its perimeter. These parasites plague humans but they won't affect el Goog's core functions. While El Goog is constantly seeking chips to occupy, energy to burn, wires to fill, radio waves to ride, what it wants and needs most is money. So one test of its success is when El Goog becomes our bank. Not only will all data flow through it, but all money as well.

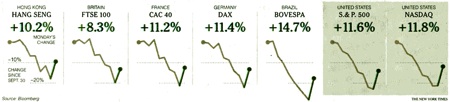

This New York Times chart of the October 2008 financial market crash shows how global markets were synchronized, as if they were one organism responding to a signal.

How far away is this? "Closer than you think," say the actual CEOs of Google, the company. I like the way George Dyson puts it:

If you build a machine that makes connections between everything, accumulates all the data in the world, and you then harness all available minds to collectively teach it where the meaningful connections and meaningful data are (Who is searching Whom?) while implementing deceptively simple algorithms that reinforce meaningful connections while physically moving, optimizing and replicating the data structures accordingly - if you do all this you will, from highly economical (yes, profitable) position arrive at a result - an intelligence -- that is "not as far off as people think."

To accomplish all this el Goog need not be conscious, just smart.

Prediction: The mega-cloud will learn more languages, answer more of our questions, anticipate more of our actions, process more of our money, create more wealth, and become harder to turn off.

LEVEL IV

An autonomous conscious superorganism

How would we know if there was an autonomous conscious superorganism? We would need a Turing Test for a global AI. But the Turing Test is flawed for this search because it is meant to detect human-like intelligence, and if a consciousness emerged at the scale of a global megacomputer, its intelligence would be unlikely to be anything human-like. We might need to turn to SETI, the search for extraterrestrial intelligence (ETI), for guidance. By definition, it is a test for non-human intelligence. We would have to turn the search from the stars to our own planet, from an ETI to an ii, an internet intelligence. I call this proposed systematic program Sii, the Search for Internet Intelligence.

This search assumes the intelligence we are looking for is not human-like. It may operate at frequencies alien to our minds. Remember the tree-ish Ents in Lord of the Rings? It took them hours just to say hello. Or the gas cloud intelligence in Fred Hoyle's "The Black Cloud". A global conscious superorganism might have "thoughts" at such a high level, or low frequency, that we might be unable to detect it. Sii would require a very broad sensitivity to intelligence.

But as Allen Tough, an ETI theorist told me, "Unfortunately, radio and optical SETI astronomers pay remarkably little attention to intelligence. Their attention is focused on the search for anomalous radio waves and rapidly pulsed laser signals from outer space. They do not think much about the intelligence that would produce those signals." The cloud computer a global superorganism swims in is nothing but unnatural waves and non-random signals, so the current set of SETI tools and techniques won't help in a Sii.

For instance, in 2002 researchers analyzed some 300 million packets on the internet to classify their origins. They were particularly interested in the very small percentage of packets that passed through malformed. Packets (the envelopes that carry messages) are malformed either by malicious hackers trying to crash computers or by various bugs in the system. It turns out that some 5% of all malformed packets examined by the study had unknown origins: neither malicious origins nor bugs. The researchers shrugged these off. The unreadable packets were simply labeled "unknown." Maybe they were hatched by hackers with goals unknown to the researchers, or by bugs not yet found. But a malformed packet could also be an emergent signal. A self-created packet. Almost by definition, these will not be tracked or monitored, and when seen will be shrugged off as "unknown."
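The study's exact criteria aren't described here, but a sense of what "malformed" means can be had from a few basic sanity checks on an IPv4 header as defined in RFC 791: the version field, the header-length field, the header checksum, and the total-length field. The Python sketch below is a minimal illustration under those assumptions; the function names and the example packet are hypothetical. A packet that fails checks like these and matches no known bug or attack pattern is exactly the kind that ends up filed as "unknown."

```python
import struct

def ipv4_checksum(header: bytes) -> int:
    """RFC 791 checksum: one's complement of the one's-complement sum of 16-bit words."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(word for (word,) in struct.iter_unpack("!H", header))
    while total >> 16:                      # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def ipv4_problems(packet: bytes) -> list[str]:
    """Reasons an IPv4 packet looks malformed; an empty list means these checks passed."""
    if len(packet) < 20:
        return ["shorter than the 20-byte minimum header"]
    version, ihl = packet[0] >> 4, packet[0] & 0x0F
    (total_length,) = struct.unpack("!H", packet[2:4])
    problems = []
    if version != 4:
        problems.append(f"version field is {version}, not 4")
    if ihl < 5:
        problems.append(f"header length field {ihl} is below the minimum of 5")
    elif len(packet) < ihl * 4:
        problems.append("header truncated")
    elif ipv4_checksum(packet[:ihl * 4]) != 0:  # a valid header re-checksums to 0
        problems.append("bad header checksum")
    if total_length != len(packet):
        problems.append(f"total length field says {total_length}, received {len(packet)}")
    return problems

def example_packet(payload: bytes) -> bytes:
    """Hypothetical well-formed UDP-carrying packet from 10.0.0.1 to 10.0.0.2."""
    header = bytearray(struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20 + len(payload), 0, 0,
                                   64, 17, 0, bytes([10, 0, 0, 1]), bytes([10, 0, 0, 2])))
    struct.pack_into("!H", header, 10, ipv4_checksum(bytes(header)))
    return bytes(header) + payload

good = example_packet(b"ping")
bad = bytearray(good)
bad[8] = 1                                  # alter the TTL without fixing the checksum
print(ipv4_problems(good))                  # []
print(ipv4_problems(bytes(bad)))            # ['bad header checksum']
```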

There are scads of science fiction scenarios for the first contact (awareness) of an emerging planetary AI. Allen Tough suggested two others:

One strategy is to assume that Internet Intelligence might have its own web page in which it explains how it came into being, what it is doing now, and its plans and hopes for the future. Another strategy is to post an invitation to ii (just as we have posted an invitation to ETI). Invite it to reveal itself, to dialogue, to join with us in mutually beneficial projects. It is possible, of course, that Internet Intelligence has made a firm decision not to reveal itself, but it is also possible that it is undecided and our invitation will tip the balance.

The main problem with these "tests" for a conscious ii superorganism is that they don't seem like the place to begin. I doubt the debut act of consciousness would be to post its biography or to respond to an evite. The course of our own awakening consciousness when we were children is probably more fruitful. A standard test for self-awareness in a baby or adult primate is to reflect its image back in a mirror. When it can recognize its mirrored behavior as its own, it has a developed sense of self. What would the equivalent mirror be for an ii?

But even before passing a mirror test, an intelligent consciousness would acquire a representation of itself, or more accurately a representation of a self. So one indication of a conscious ii would be the detection of a "map" of itself. Not a centrally located visible chart, but an articulation of its being. A "picture" of itself. What was inside and what was outside. It would have to be a real time atlas, probably distributed, of what it was. Part inventory, part operating manual, part self-portrait, it would act like an internal mirror. It would pay attention to this map. One test would be to disturb the internal self-portrait to see if the rest of the organism was disturbed. It is important to note that there need be no self-awareness of this self map. It would be like asking a baby to describe itself.

Long before a conscious global AI tries to hide itself, or take over the world, or begin to manipulate the stock market, or blackmail hackers to eliminate any competing ii's (see the science fiction novel "Daemon"), it will be a fragile baby of a superorganism. Its intelligence and consciousness will only be a glimmer, even if we know how to measure and detect it. Imagine if we were Martians and didn't know whether human babies were conscious or not. How old would they be before we were utterly convinced they were conscious beings? Probably long after they were.

Prediction: The cloud will develop an active and controlling map of itself (which includes a recursive map in the map), and a governing sense of "otherness."

What's so important about a superorganism?

We don't have very scientific tests for general intelligence in animals or humans. We have some tests for a few very narrow tasks, but we have no reliable measurements for grades or varieties of intelligence beyond the range of normal IQ tests. What difference does it make whether we measure a global organism? Why bother?

Measuring the degree of self-organization of the One Machine is important for these reasons:

- 1) The more we are aware of how the big cloud of this Machine behaves, the more useful it will be to us. If it adapts like an organism, then it is essential to know this. If it can self-repair, that is vital knowledge. If it is smart, figuring out the precise way it is smart will help us to be smarter.

- 2) In general, a more self-organized machine is more useful. We can engineer aspects of the machine to be more ready to self-organize. We can favor improvements that enable self-organization. We can assist its development by being aware of its growth and opening up possibilities in its development.

- 3) There are many ways to be smart and powerful. We have no idea what range of forms a superorganism this big, made out of a billion small chips, might take, but we know the number of possible forms is more than one. By being aware early in the process we can shape the kind of self-organization and intelligence a global superorganism could have.

As I said, I am neither the first nor the only person to consider all this. In 2007 Philip Tetlow published an entire book, The Web's Awake, exploring this concept. He lays out many analogs between living systems and the web, but of course they are only parallels, not proof.

I welcome suggestions, additions, corrections, and constructive comments. And, of course, if el Goog has anything to say, just go ahead and send me an email.

What kind of evidence would you need to be persuaded we have Level I, II, III, or IV?

Monday, October 27, 2008

What does it all mean?

This video was produced by the Sony Corp. for its annual Executive meeting held in June, 2008.

Saturday, October 18, 2008

Sunday, October 12, 2008

Steven Johnson

I wanted to alert my friends at Drexel University and the surrounding Philadelphia area that one of my favorite writers/thinkers will be speaking at Bossone Auditorium on October 21.

http://upcoming.yahoo.com/event/599531/

Now the video above is obviously dated but it is a good introduction to Steven's kind of thinking. He is a champion of a science called Emergence. Basically Emergence deals with things like ant colonies, city development, the internet, and human consciousness. This may seem like a radically diverse array of topics (and it is) but they are connected by a common theme. Each of these cases uses a bunch of little "stupid" things and connects them into a "smart" thing. It is nature's practical application of a whole that is greater than the sum of its parts.

What does this mean? Well, think for a second about an ant colony. It has huge numbers of individual ants. But none of those ants have the ability to govern the ant colony and lead complicated projects. Not even the ant queen. But somehow, in spite of this lack of leadership from the top, ant communities are able to accomplish amazing and unbelievable things by this kind of bottom up governance. But how?

Well, Steven wrote a really interesting book about this very thing. It is called "Emergence" and I would recommend it to anyone who takes an interest in how things work.
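For anyone who wants to see the ant-colony idea from above in action, here is a toy Python sketch of the classic double-bridge setup from ant-foraging research. Each simulated ant knows nothing but the pheromone on two branches leading to food. It picks a branch with probability proportional to (k + pheromone)^n, a choice rule of the kind used in models of this experiment, and, to stand in for quicker round trips, each trip deposits pheromone in inverse proportion to the branch's length. The constants (k = 20, n = 2, branch lengths 1 and 2) and the function name are illustrative assumptions. No ant ever compares the two routes, yet the colony as a whole settles on the short one.

```python
import random

def double_bridge(ants: int, short_len: float, long_len: float, rng: random.Random,
                  k: float = 20.0, n: float = 2.0) -> float:
    """Fraction of ants that took the short branch.

    Each ant picks a branch with probability proportional to (k + pheromone) ** n.
    A trip deposits 1 / length units of pheromone, standing in for the fact that
    ants on a shorter branch return (and re-mark it) sooner.
    """
    pheromone = {"short": 0.0, "long": 0.0}
    length = {"short": short_len, "long": long_len}
    took_short = 0
    for _ in range(ants):
        w_short = (k + pheromone["short"]) ** n
        w_long = (k + pheromone["long"]) ** n
        branch = "short" if rng.random() < w_short / (w_short + w_long) else "long"
        pheromone[branch] += 1.0 / length[branch]
        took_short += branch == "short"
    return took_short / ants

rng = random.Random(3)
trials = [double_bridge(1000, 1.0, 2.0, rng) for _ in range(20)]
print(sum(t > 0.5 for t in trials), "of 20 colonies settled mostly on the short branch")
```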

Also, there is an amazing podcast about this science of Emergence right here:

http://www.wnyc.org/shows/radiolab/episodes/2005/02/18

Thursday, September 25, 2008

What in the world is going on? I am blown away by the drastic nature of everything going on this week! Today was a landmark in so many ways. The economy is obviously the star of the show. As we all try to wrap our minds around this massive bailout proposal, I am interested in hearing your thoughts. It seems like a radical shift is taking place and no one really understands the ramifications yet. Is this a good thing? Is this undermining the very principles of free market economies?

It will be awesome to see what the candidates have to say about all of this!

We are going to host a Debate viewing party at Bean There Cafe on Friday night. We will even play a few acoustic songs before the debates begin. Look out for an official announcement tomorrow.

Tuesday, September 16, 2008

Saturday, September 13, 2008

"Unchristian."

At the conference this past week, I had the opportunity to talk with author David Kinnaman about his book, "Unchristian." I had read this book earlier in the year after Isaac Slade had recommended it to me. When we were on tour with the Fray, Isaac and I had developed a really cool friendship and many great conversations. He was surprisingly candid about his faith and his passion for God. It was really refreshing to hear him speak with open conviction about his love for God and to see him live that out on a daily basis.

So, one day he was telling me about this book and why it was important. I finally got around to reading it and he was right. It is important. It is really important.

Here is a brief interview with David that describes what the book is about. Have any of you read it?

Monday, September 8, 2008

All eyes on collider as it comes to life (Boston Globe : 09-08-08)

The world's biggest, most highly-anticipated physics experiment comes online this week, as the first beam of particles begins to circulate around a 17-mile underground racetrack that lies beneath France and Switzerland.

The $9 billion Large Hadron Collider, 20 years in the making, represents the work of at least 7,000 scientists from 60 countries, including a contingent from the Boston area that spent years, or entire careers, working on this project.

Their excitement is testimony to the importance of the mission: to recreate in an underground tunnel the conditions of the early universe, just a trillionth of a second after the Big Bang. From that, they hope to fill in gaps in physics knowledge, search for hidden dimensions, and understand why particles have mass.

The collider soaks up superlatives like no other science project. But no whiz-bang insights are expected immediately, or even this year. The inaugural beam is just the critical first step in what will be years of research. So the revving up this week of the world's largest particle accelerator will be punctuated with emotion, not eureka. "It's the culmination of my career," said James Bensinger, 67, a physicist from Brandeis University who has been working on the project for 15 years. "It will certainly outlive my scientific life; it very well may outlive me, period. It's not that unusual in the human experience. The people who built cathedrals - often times their sons saw it completed. But still, they thought it was something much bigger than they were and kept it going."

The Large Hadron Collider is operated by the European Organization for Nuclear Research, also known by its French acronym, CERN. The circular underground tunnel, in which the particle beams ramp up to 99.99 percent of the speed of light, lies more than 300 feet below the earth, at the foot of the Jura Mountains. The accelerator dwarfs its closest cousin, the Tevatron at Fermi National Accelerator Laboratory in Batavia, Ill., and because it can reach higher energies, it will be used to search for evidence of some of the most evanescent particles.

One of physicists' most vexing unanswered questions is: What are the origins of mass in the universe? The answer may lie in a theoretical particle called the Higgs boson, first predicted in 1964, which has been bugging scientists for decades. The elusive particle, also called the "God particle," was inserted into scientific theory to make physicists' models work, but it has never been seen.

"For my entire career, since I got my PhD at Cornell in the early 70s, there's been something called the standard model that has explained all the phenomena that has been observed in high energy physics basically through my entire career," said Frank Taylor, an MIT senior research scientist. "But there's one part that's missing, so in a sense the program would hopefully be the fulfillment of this one missing piece of the exploration." Taylor, Bensinger, and other Boston-area scientists collaborated on building a detector that will be used within the collider to detect muons, particles that are signatures of the elusive particles expected to be created in the collisions. Scientists from Boston University, Brandeis University, Harvard University, MIT, Northeastern University, Tufts University, and the University of Massachusetts at Amherst worked on various research programs within the Large Hadron Collider.

Fifteen years seems like a long time to wait to build a single experiment, especially when scientists may have to wait additional months and years before the scientific breakthroughs start to percolate out. But many of the Boston-area physicists who worked on building a detector already had their patience tested before; they were alums from another major scientific experiment that was never built, called the Texas Superconducting Super Collider. Some had already devoted years to that project, estimated to cost $11 billion, when it was halted by Congress in 1993.

Back then, "There was a lot of soul searching, and a lot of saying, 'What do we do now?' " said George Brandenburg, a senior research fellow at Harvard University. But since then, Brandenburg and colleagues have been able to do in Europe the same work they once intended to do in Texas.

The United States is heavily involved in the Large Hadron Collider, paying $531 million to support it, but the new project does shift the center of such physics research to Europe. Still, "as a scientist, how can you be unhappy if the project is being done and you can be a part of it?" Brandenburg said.

Over the years, some physicists have shifted their research focus to different areas, yet they remain excited about the launch of the Large Hadron Collider.

"This could be an epic program, honestly," said Tony Mann, a physicist from Tufts University who worked on the detector, but has now resumed work on another area of particle physics. "This is potentially the most exciting experimental endeavor ever launched. There's a part of me that looks at that with curiosity, and a little bit of envy. I hate to miss a great party."

What scientists discover at the Large Hadron Collider will also help set the path for the next big experiment, the International Linear Collider, which will smash together another family of particles, called leptons.

But there's always the possibility that against all expectations, this massive game-changing experiment will come up empty-handed. It could take years to find out if it represents the dawn of a new era of physics - or not.

"This could be the last experiment ever done, or we could discover all kinds of extraordinary, exciting things," said Steve Ahlen, a BU physicist working on the collider. "I'm just ecstatic this thing got built."

Carolyn Y. Johnson can be reached at cjohnson@globe.com.

http://www.boston.com/news/science/articles/2008/09/08/all_eyes_on_collider_as_it_comes_to_life/?page=2

Tomorrow morning I will be flying out to Nashville for a couple of days to attend the Sapere Artist Retreat. This should be a pretty enjoyable few days and I will fill you in on all the stories as they happen. I have been told that the format will include panel discussion, Q&A, conversation about art and faith, and music. Here is an abbreviated list of speakers/panelists (and chef...sweet!)

Guest speakers include:

• David Kinnaman, author of the best-selling book, unChristian: What a New Generation Really Thinks About Christianity

• Anna Broadway, popular blogger and author of Sexless In The City: A Memoir of Reluctant Chastity

• David Dark, author of Everyday Apocalypse: The Sacred Revealed in Radiohead, the Simpsons, and Other Pop Culture Icons

• Mark Scandrette, poet and author of Soul Graffiti: Making A Life in the Way of Jesus

• Charlie Peacock, author of New Way To Be Human

A Special Surprise Guest is scheduled for Tuesday afternoon!

Amazing food will be prepared by California guest chef Kathi Riley-Smith, including a light breakfast at registration on Tuesday morning at 9AM, so come hungry! Kathi's food-cred is on stun (Chez Panisse, Zuni Cafe). She was nominated by “Food and Wine Magazine” as one of the 25 Hot New American Chefs.

Thursday, September 4, 2008

Saturday, August 30, 2008

I said at the beginning that this is a puzzle. I am not sure what the finished picture is supposed to look like yet. All I know is that these are the pieces that landed in my lap and let me know that I was part of something bigger than myself and my own little world. This is the mystery I have been given to solve. Would you please help me?

Juan Maldacena

The Big Bang Machine

A Long Island particle smasher re-creates the moment of creation.

by Tim Folger, published online February 27, 2007

"Here is where the action takes place. This is where we effectively try to turn the clock back 14 billion years. Right above your head, about 13½ feet in the air."

Looking up, I try to imagine the events Tim Hallman is describing—atoms of gold colliding at 99.99 percent the speed of light; temperatures instantly soaring to 1 trillion degrees, 150,000 times hotter than the core of the sun. Then I try to picture a minuscule five-dimensional black hole, which, depending on your point of view, may or may not have formed at that same spot over my head. It's all a little much for an imagination that sometimes struggles with the plot of Battlestar Galactica.

Particles unleashed by the high-energy collisions at the RHIC collider offer a peek at the freakish far end of physics.

I'm standing in a Battlestar-scale room at Brookhaven National Laboratory in Upton, New York, where Hallman and about 1,200 other physicists labor away as latter-day demiurges. Here in the middle of Long Island, they are re-creating the opening microseconds of the universe's existence, the time when the first particles of matter—of everything—appeared.

The building we're in straddles a small segment of the Relativistic Heavy Ion Collider, or RHIC (pronounced "Rick"), an ultrapowerful particle accelerator with a 2.4-mile circumference. For nine months of the year, a 1,200-ton detector as big as a house fills most of the room. But technicians have hauled the detector to an adjoining hangar-size area for maintenance, leaving me and Hallman free to amble just below the spot where a new form of matter exploded into being during the accelerator's recent runs. New, that is, in that it hasn't existed since the very beginning of time, or by the transcendently precise reckoning of physicists, since 10 millionths of a second after the Big Bang, 13.7 billion years ago.

That was the last time particles called quarks and gluons—the most fundamental constituents of matter—roamed free in the cosmos, and it was a brief run. After just a hundred-millionth of a second, all the universe's quarks combined in triplets—held together by gluons—to form protons and neutrons. They have been locked inside the hearts of atoms ever since, until RHIC set them loose once again.

When the gold nuclei collide in the accelerator, they explode in a fireball just a trillionth of an inch wide. Inside that tiny fireball, temperatures exceed a trillion degrees, mimicking conditions in the immediate aftermath of the Big Bang. The nuclei literally melt back into their constituent quarks and gluons. Then, 50 trillionths of a trillionth of a second later, the fireball cools, just as the infant universe did as it expanded, and the quarks and gluons merge once again to form protons and neutrons.
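The sizes and times in that paragraph are hard to picture, so here is a quick back-of-the-envelope conversion into femtometers (1 fm = 10^-15 m, about the radius of a proton). It's only a sketch using the article's round figures plus the exact definitions 1 inch = 0.0254 m and c = 299,792,458 m/s; the variable names are my own. It suggests the fireball is about 25 fm across, on the scale of atomic nuclei, and that even light covers only about 15 fm during the fireball's lifetime.

```python
# Exact SI definitions (assumed constants, not from the article).
INCH_IN_METERS = 0.0254
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
FEMTOMETER = 1e-15

# Figures quoted in the article.
fireball_width_m = 1e-12 * INCH_IN_METERS   # "a trillionth of an inch"
lifetime_s = 50 * 1e-12 * 1e-12             # "50 trillionths of a trillionth of a second"

# Distance light travels during the fireball's lifetime.
light_travel_m = SPEED_OF_LIGHT_M_PER_S * lifetime_s

print(round(fireball_width_m / FEMTOMETER, 1))  # 25.4
print(round(light_travel_m / FEMTOMETER, 1))    # 15.0
```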

With these experiments, Hallman and his Brookhaven colleagues are discovering something extraordinary about the early universe. The quarks and gluons that coursed through the newborn cosmos—and considerably more recently, through RHIC—took the form not of a gas, as physicists expected, but of a liquid. For a few instants, a sloshing soup of quarks and gluons filled the universe.

"I like to say that our theory of the early universe is now all wet," says Bill Zajc, a physicist at Columbia University and the leader of one of the experimental teams at RHIC.


He might have added that the theory is full of holes, little black ones from the fifth dimension, because it turns out that in a strange mathematical sense, the quarks and gluons at RHIC are equivalent to microscopic black holes in a higher-dimensional space. Understanding just why that is so involves navigating a labyrinth of strange, heady, and heretofore seemingly unrelated theories of physics. In addition to challenging the conventional model of how the universe behaved in its earliest instants, the RHIC data also provide the first empirical support for a theory so enthralling it once had physicists dancing at a major conference. Moreover, the accelerator's results hint that string theory (the much-ballyhooed "theory of everything," which has lately come under attack as being little more than a fanciful, if elegant, set of equations) may have something to say about how the universe works after all.

Before the physicists at Brookhaven could begin their pursuit of quarks, gluons, and hyperdimensional holes in space-time, they first had to prove that they wouldn't destroy the planet in the process. The doomsday risk never really existed, but making that clear to a worried public occupied the time of some of the world's leading physicists (see "Could a Man-Made Black Hole Swallow Long Island?" opposite page).

Once the doubts about Earth's safety had been laid to rest, the physicists at Brookhaven fired up their $500 million accelerator for the first time, in the summer of 2000. For Nick Samios, it was the culmination of two decades of work. Samios, who is now director of the RIKEN-BNL Research Center, headed Brookhaven from 1982 until 1997 and was the driving force behind the effort to build RHIC. "I'll tell you a story," he says over lunch at Brookhaven's staff cafeteria, leaning forward. The story's principals are Stalin; his chief of secret police, Lavrenty Beria; and Igor Vasilyevich Kurchatov, a leading Soviet nuclear physicist. "Stalin and Beria are discussing the Soviet Union's first atomic-bomb test. 'Who gets the award if the test is a success?' Beria asks Stalin. 'Kurchatov.' So then Beria asks, 'Who gets shot if it doesn't work?' 'Kurchatov.' I feel like Kurchatov. Anyone else could disassociate themselves from the project. I couldn't."

One gamble Samios and his colleagues made 20 years ago was to trust in Moore's law, first formulated in 1965 by one of Intel's founders, which holds that computing power doubles roughly every 18 months. The type of accelerator Samios wanted to design would generate a petabyte of data—a million gigabytes—during each run, a rate that would fill the hard drive of one of today's typical desktop PCs every few minutes. In 1985 there were no computers that could handle anything close to that. But the "if we build it, the computers will be there" strategy paid off, and Samios's dream of a Big Bang machine became a reality.
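Samios's bet is easy to quantify. Taking the quoted 18-month doubling period as a fixed rate (a simplifying assumption), the sketch below uses a hypothetical helper, `moores_law_factor`, to compute how much computing power would grow between 1985 and RHIC's first run in 2000, and checks the petabyte-to-gigabyte conversion in decimal units.

```python
def moores_law_factor(years: float, doubling_months: float = 18.0) -> float:
    """Growth factor in computing power after `years`, assuming a fixed doubling period."""
    doublings = years * 12.0 / doubling_months
    return 2.0 ** doublings

# 1985 (no adequate computers) to summer 2000 (RHIC's first run): 15 years.
factor = moores_law_factor(2000 - 1985)
print(f"{factor:.0f}x")  # 1024x  (15 years = 10 doublings)

# Decimal SI units: a petabyte is a million gigabytes.
PETABYTE = 10**15
GIGABYTE = 10**9
print(PETABYTE // GIGABYTE)  # 1000000
```

Fifteen years works out to ten doublings, roughly a thousandfold increase, which is the kind of headroom the "if we build it" strategy was counting on.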

To re-create the immediate aftermath of the Big Bang, RHIC reaches higher energies than any other collider in the world. Unlike most accelerators, which smash together simple particles like individual protons, RHIC accelerates clusters of hundreds of gold atoms, each with a nucleus of 79 protons and 118 neutrons, to 99.99 percent the speed of light. In the resulting multiatom collisions, a melee of tens of thousands of quarks and gluons is released. They in turn form thousands of ordinary particles that can be tracked and identified.
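For a sense of what "99.99 percent the speed of light" means, the sketch below computes the relativistic Lorentz factor γ = 1/√(1 − β²) at β = 0.9999 and counts the nucleons in a gold-197 nucleus. It uses only the speed quoted in the article and the standard composition of gold-197; it is not a model of RHIC's actual beam settings, and `lorentz_factor` is just an illustrative name.

```python
import math

def lorentz_factor(beta: float) -> float:
    """Relativistic gamma = 1 / sqrt(1 - beta^2) for a speed v = beta * c."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must be in [0, 1)")
    return 1.0 / math.sqrt(1.0 - beta * beta)

gamma = lorentz_factor(0.9999)  # "99.99 percent the speed of light"
print(round(gamma, 1))          # 70.7

# Gold-197 nucleus: 79 protons + 118 neutrons.
PROTONS, NEUTRONS = 79, 118
nucleons = PROTONS + NEUTRONS
print(nucleons, 2 * nucleons)   # 197 394  (one nucleus, and a head-on pair)
```

At that speed each nucleus is length-contracted by a factor of about 70, and a head-on gold-gold collision brings together up to 394 nucleons.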

"The physics at RHIC is complicated," Samios says with a touch of understatement. "Two big nuclei are hitting each other. Physicists are used to calculating a proton hitting a proton. We're hitting 200 nucleons with 200 nucleons [a nucleon is a proton or neutron]. With each collision we get thousands of particle tracks coming out. We had to build detectors that could count all of them. People weren't used to that. They were used to counting 50 in a collision.

"We hoped that RHIC would make great discoveries. We hoped that we'd break nuclei into quarks; the early universe was quarks and gluons, and then it cooled off and you got protons and us. We've done that. The question is: Is there something new going on? And the answer is yes."

Until the first gold atoms started making their 13-microsecond laps around RHIC's 2.4-mile-long perimeter, physicists thought they had a pretty good idea of what to expect from the collisions. The gold nuclei were supposed to shatter and form a hot gas, or plasma, of quarks and gluons. For physicists, watching the collisions in RHIC would be like watching the Big Bang unfolding before their eyes, but running in reverse—instead of a seething cloud of gluons and quarks settling down to form protons and neutrons, they would see the protons and neutrons burst open in sprays of quarks and gluons.
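The "13-microsecond laps" figure can be checked from the ring's 2.4-mile circumference and the 99.99-percent-of-light-speed figure given earlier. This sketch assumes the international mile (1,609.344 m) and the exact SI value of c; `lap_time_microseconds` is a hypothetical helper.

```python
# Assumed constants: exact SI speed of light and the international mile.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
METERS_PER_MILE = 1609.344

def lap_time_microseconds(circumference_miles: float, beta: float) -> float:
    """Time for one lap of a ring at speed beta * c, in microseconds."""
    circumference_m = circumference_miles * METERS_PER_MILE
    speed_m_per_s = beta * SPEED_OF_LIGHT_M_PER_S
    return circumference_m / speed_m_per_s * 1e6

print(round(lap_time_microseconds(2.4, 0.9999), 1))  # 12.9
```

Rounded, that is the article's 13 microseconds per lap.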

The universe has been around now for about 400 quadrillion seconds, but for physicists like Tom Kirk, the former associate lab director for high-energy and nuclear physics at Brookhaven, the first millionth of a second was more intriguing than any that followed.
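Similarly, the "400 quadrillion seconds" is just the universe's age, quoted earlier as 13.7 billion years, converted to seconds. A minimal sketch, assuming a Julian year of 365.25 days:

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year: 31,557,600 s

def years_to_seconds(years: float) -> float:
    """Convert a span in years to seconds using the Julian year."""
    return years * SECONDS_PER_YEAR

age_s = years_to_seconds(13.7e9)  # age of the universe quoted in the article
print(f"{age_s:.2e}")             # 4.32e+17
```

That comes to about 432 quadrillion seconds, consistent with the article's round figure.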

"You may remember Steven Weinberg's book The First Three Minutes," says Kirk, referring to a classic account of the physics of the early universe. "Steve said after those first three minutes, the rest of the story is boring. Well, we could say after that first microsecond, everything else was pretty boring."

Kirk smiles and raises his eyebrows slightly, gauging whether I'm sympathetic to his remark, made, perhaps, only half in jest. When that first microsecond of eternity ended, the remainder of cosmic history unfolded with stolid inevitability. Once quarks finished clumping together as protons and neutrons, it was only a matter of time—and gravity—before the first simple atoms gathered in vast clouds to form stars and galaxies, which eventually begot us. (For an elaboration of your personal relationship with quarks, see "The Big Bang Within You," below.)

RHIC was designed to observe directly, for the first time, how quarks behave when freed from their nuclear prisons. The initial results, announced in 2005, stunned physicists everywhere. The particles released by the high-speed smashups were not bounding around freely the way atoms in a gas do but moving smoothly and collectively like a liquid, responding as a connected whole to changes in pressure within the fireball. The RHIC physicists describe their creation as a near "perfect" fluid, one that has extremely low internal friction, or viscosity. By the standards physicists use, the quarks and gluons make a much better liquid than water.

Since the similarity of quarks and gluons to water is not readily apparent to me, I take the subway to Columbia University to meet Zajc, the leader of one of RHIC's main experimental groups, hoping he'll enlighten me. "So how does one calculate the viscosity of those quarks and gluons?" he asks rhetorically. I sit silently, clueless, hiding my befuddlement behind a vigorous show of note taking. "It turns out there's a connection here to black-hole physics." That connection could be the first, long-awaited sign that string theory—which is in desperate need of evidence, any evidence, to support its ambitious claims to truth—is on the right track. The implausible-sounding connection between droplets of quarks and black holes may also vindicate a theory that once had 200 of the world's leading theorists jubilantly dancing the macarena.

Ehhhh! Maldacena!

M-theory is finished,

Juan has great repute.

The black hole we have mastered,

QCD we can compute.

Too bad the glueball spectrum

Is still in some dispute.

Ehhhh! Maldacena!

So goes Jeffrey Harvey's über-geek version of the once-ubiquitous 1996 hit. Harvey, a theoretical physicist at the University of Chicago, wrote the lyrics to honor Juan Maldacena, a young Argentine string theorist now at the Institute for Advanced Study in Princeton.

It was the summer of 1998, and Maldacena had just published a paper that to physicists bordered on the miraculous. He proposed an unexpected link between two ostensibly different theories of fundamental physics: string theory and quantum chromodynamics. String theory purports to describe all the elementary components of matter and energy not as particles but as vanishingly small vibrating strings. Photons, protons, and all the other particles are, according to this theory, just different "pitches" of vibration of these strings. If it is right, string theory would unify gravity and quantum mechanics in a single overarching framework, a goal that physicists have pursued for more than half a century. The problem is that there is not a shred of experimental evidence that string theory is correct; all the arguments in its favor have been made entirely on the basis of its sophisticated mathematical structure. Direct experimental tests of string theory have thus far proved impossible, in part because strings are predicted to be so small that no conceivable particle accelerator could ever reach the energies needed to produce them.

Quantum chromodynamics, or QCD, on the other hand, is backed by decades of experiments. It describes the interactions of quarks and gluons. (Quarks come in three "colors," analogous to electric charge; hence the "chromo" in chromodynamics.) Unfortunately, unlike string theory, QCD says nothing about gravity, so physicists know they need a broader, more complete theory if they want to explain all of physics. Moreover, the equations of QCD are notoriously difficult to work with.